This article is sectioned off oddly, and I apologize for that. The information is still very good:

* To find out how to download a full normal website (or a full domain if you want), check out the middle section of this article. It's sectioned off with horizontal lines, like the one you see above this sentence. Here is also a link to an earlier article of mine on this very topic (downloading a full normal website and perhaps a full domain): wget full site

* To find out how to download a full directory site (like the ones in the screenshots below), also known as an Indexed or FancyIndexed site, read the rest of the article below. Just ignore the middle section separated by horizontal lines, since that section is for downloading a normal website.

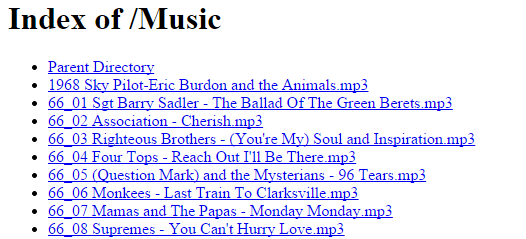

Imagine you stumbled across a site that looks like this:

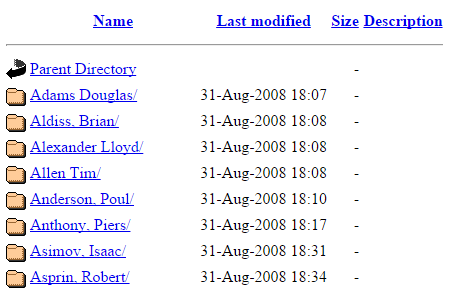

Or like this:

By the way, these are called Indexed pages, and the prettier ones are called FancyIndexed pages. Basically, if a directory on the web server has no index.html or index.php page (and directory listings are enabled), the web server instead generates an automatic, dynamic index page. That page shows the contents of the directory like a file browser, where you can browse folders and download files. This is very useful for sharing content online or on a local network.
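If you're curious what wget actually sees on one of these pages, here's a minimal sketch that pulls the link targets out of a listing and sorts them by kind. The sample HTML is a made-up, trimmed-down imitation of an Apache-style autoindex page (real pages vary by server and configuration), and `list_links`, `classify_links` and `sample_listing` are hypothetical names for this example. Pay attention to the `?C=...` links. Those are the column-sorting views, and wget saves each one as yet another generated `index.html?C=...` page.

```shell
# Print every href target found in an HTML directory listing read from stdin.
list_links() {
  grep -o 'href="[^"]*"' | sed -e 's/^href="//' -e 's/"$//'
}

# Label each link with the kind of thing wget would fetch for it.
classify_links() {
  while IFS= read -r link; do
    case $link in
      '?'*) echo "sort-view  $link" ;;   # saved as index.html?C=...
      /*)   echo "parent     $link" ;;   # absolute link, e.g. Parent Directory
      */)   echo "directory  $link" ;;   # subfolder, gets its own index page
      *)    echo "file       $link" ;;
    esac
  done
}

# Hypothetical sample of an Apache-style listing for www.example.com/books/
sample_listing() {
  cat <<'EOF'
<tr><th><a href="?C=N;O=D">Name</a></th><th><a href="?C=M;O=A">Last modified</a></th></tr>
<tr><td><a href="/">Parent Directory</a></td></tr>
<tr><td><a href="folder1/">folder1/</a></td></tr>
<tr><td><a href="file4.txt">file4.txt</a></td></tr>
EOF
}

sample_listing | list_links | classify_links
```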

PS: above pictures are from sites offering free content

How would you download everything recursively?

The answer is WGET! Here is a quick guide to it (have a quick read, it's fun and you will learn a lot):

Regravitys WGET – A Noob’s Guide

http://www.regravity.com/documents/Wget%20%96%20A%20Noob%92s%20guide%20-%20Regravity.com.pdf

Open up a Linux box, or Cygwin. Make a download directory and cd into it:

```shell
# make the directory (-p also creates any missing parent folders, here /data and /data/Downloads)
mkdir -p /data/Downloads/books
# now get into the folder
cd /data/Downloads/books
```

Here is one of the best ways to run wget (more below):

```shell
wget -nc -np --reject "index.html*" -e robots=off -r URL-GOES-HERE
# example:
wget -nc -np --reject "index.html*" -e robots=off -r www.example.com/books/

# What do all of these options do?

# (1) --reject "index.html*": keeps the dynamically generated index.html pages
#     off your disk. If you look at the screenshots above, all of those files
#     really sit on the web server, but the index.html page showing them doesn't
#     really exist. The server generates it on the fly, and if the owner added a
#     file or folder, the page would show it as soon as you refreshed.
#     Every folder gets its own generated index.html, in fact several of them:
#     one for each way you can sort the page (index.html?C=N;O=D and so on).
#     That's a lot of pages to download, and they get pretty big when there are
#     many files and folders, so it's best to skip them. (wget still fetches each
#     one to find the links inside, then deletes it.)
# (2) -nc: doesn't download a file that already exists at the destination, even
#     if the content is different (the source is the remote server, and the
#     destination is the local computer you're downloading to). That is bad if
#     the source file is newer, because the newer file will not be downloaded.
#     A better option instead of -nc is often -N (timestamping): wget
#     re-downloads a file only when the remote copy is newer than the local one
#     or the sizes differ, and skips it otherwise. -N and -nc can't be combined.
# (3) -np (--no-parent): makes sure you only download within your URL.
#     For example, if you're downloading everything in www.example.com/books/,
#     you want
#       www.example.com/books/folder1/file1.txt
#       www.example.com/books/folder1/file3.txt
#       www.example.com/books/file4.txt
#     but you definitely don't want
#       www.example.com/movies/stuff
#     wget follows links, and every indexed page has a "Parent Directory" link,
#     so without -np wget would climb up and crawl the rest of the site.
#     -np keeps it inside the books directory. Keep the trailing slash on the
#     URL: without it, wget treats "books" as a file whose parent is /.
# (4) -r: downloads everything recursively, so we get all of
#     www.example.com/books/. The default maximum depth is 5 levels; add
#     -l inf for deeper trees.
# (5) -e robots=off: during a recursive download wget honors the site's
#     robots.txt, the file that tells crawlers (like search engines) which
#     paths not to visit. A recursive wget looks just like a spider walking the
#     site, so if robots.txt disallows your path, wget stops after the first
#     page. robots.txt sits at the root of the site
#     (www.example.com/robots.txt), and how much it blocks varies from server
#     to server. robots=off tells wget to ignore it.
```
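The -np rule boils down to a path-prefix check. Below is a simplified model of it, an assumption about the rule's shape rather than wget's actual code: a link is kept only if its path starts with the directory of the URL you started from. The host check is left out, because wget separately stays on the starting host unless you add -H. `url_path` and `np_allows` are hypothetical helper names.

```shell
# Print the path part of a URL, dropping an optional http:// or https://
# and the host name.
url_path() {
  rest=${1#*://}
  case $rest in
    */*) printf '/%s\n' "${rest#*/}" ;;
    *)   printf '/\n' ;;
  esac
}

# Succeed (exit status 0) when CANDIDATE lies inside the directory of START.
# A simplified model of -np / --no-parent; the host check is left out.
np_allows() {
  start_dir=$(url_path "$1")
  start_dir=${start_dir%/*}/     # keep the directory part, e.g. /books/
  candidate=$(url_path "$2")
  case $candidate in
    "$start_dir"*) return 0 ;;
    *)             return 1 ;;
  esac
}

np_allows www.example.com/books/ www.example.com/books/folder1/file1.txt && echo "kept"
np_allows www.example.com/books/ www.example.com/movies/stuff || echo "skipped"
# No trailing slash: "books" counts as a file whose parent is /, so nothing is filtered.
np_allows www.example.com/books www.example.com/movies/stuff && echo "kept (oops)"
```

The last line shows why the trailing slash matters. The wget manual makes the same point: for HTTP, wget only knows `bar` is a directory in `http://foo/bar/` if the slash is there.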

Downloading everything can take a while, so it's best to run it inside screen, nohup, dtach or tmux. These programs let you run a script or program without tying it to your PuTTY/terminal session, so you can close the terminal window you're on, keep the download running, and carry on doing other things.
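Of those options, nohup is the simplest. Here's a minimal sketch of running a command detached from the terminal with its output going to a log file. `run_detached` and the log path are hypothetical. Unlike screen or tmux, nohup gives you no session to reattach to, so you watch the log instead. wget also has its own `-b` (`--background`) switch, which sends its output to a file called wget-log.

```shell
# Run a command detached from the terminal with nohup, logging all output.
# Sets DETACHED_PID so you can check on (or wait for) the job later.
run_detached() {
  log=$1
  shift
  nohup "$@" </dev/null >"$log" 2>&1 &
  DETACHED_PID=$!
}

# Example (hypothetical log path), using the wget line from above:
# run_detached /data/Downloads/books-wget.log \
#   wget -nc -np --reject "index.html*" -e robots=off -r www.example.com/books/
# tail -f /data/Downloads/books-wget.log   # Ctrl-C stops tail, not wget
```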

A wget within a screen example would be like this from start to finish:

```shell
# first, I hope you have screen (run these as root, or prefix them with sudo)
apt-get update
apt-get install screen
# launch screen with bash
screen bash
# QUICK DIVE INTO SCREEN: everything you run in here is untied from your shell,
# so you can close the terminal (called detaching) and get back to what you were
# doing. Everything you left running in the screen keeps running uninterrupted.
# When you want to come back to the screen, type "screen -x" (called reattaching).
# Besides closing the terminal, you can detach by pressing CONTROL-A, then the
# "d" key. You will no longer be in a screen session, and whatever you run from
# there is tied to your PuTTY session again.
# To see all of your screen sessions, type "screen -ls".
# "screen -x" connects without a problem if you only have 1 session. If you have
# several (each "screen" / "screen bash" opens a new session), list them with
# "screen -ls" and grab the ID (the first number on each session's line).
# Then reattach to the session of your choice with "screen -x ID",
# e.g. "screen -x 3512" if the session ID is 3512.

# make the folder
mkdir -p /data/Downloads/books
cd /data/Downloads/books
# start the download
wget -nc -np --reject "index.html*" -e robots=off -r www.example.com/books/
# now detach by closing PuTTY or your terminal, or press CONTROL-A then d
# to reattach, type "screen -x"
```

Tip (specifying & making destination/dump folder with command line arguments of wget):

Instead of making a directory and cd-ing into it, you can save some typing with the -P argument. wget makes the folder for you and dumps all the content into it, still preserving the directory tree of the remote website you're downloading. The -P only makes the folder and saves the files there. It won't move your shell into that directory.

```shell
# The command below creates the folder /data/Downloads/books and dumps the
# contents of www.example.com/books/ into it. It works recursively and skips the
# annoying index.html pages generated for these "indexed"/"fancyindexed"
# browser-style share pages. So it does everything the commands above do,
# except it also makes the folder for you.
wget -nc -np --reject "index.html*" -e robots=off -r www.example.com/books/ -P /data/Downloads/books

# -P works with absolute and relative paths (like most things in Linux),
# so this dumps everything into /data/Downloads/books as well:
cd /data/Downloads
wget -nc -np --reject "index.html*" -e robots=off -r www.example.com/books/ -P books
```

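All of www.example.com/books/ (every file and folder in there) gets downloaded under /data/Downloads/books. Because wget recreates the remote host and path, the files actually land in /data/Downloads/books/www.example.com/books/... If you want folder1/, file4.txt and the rest directly in /data/Downloads/books, add -nH --cut-dirs=1.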
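Here's a rough model of where a given file ends up, with and without -nH (`--no-host-directories`) and `--cut-dirs=N`. It's a simplification under stated assumptions, not wget's code. It ignores query strings, `-E` renaming and the other directory options. `wget_local_path` is a hypothetical helper name.

```shell
# Hypothetical helper: print the local path wget -r would use for URL.
# Args: PREFIX (-P value), URL, NO_HOST (1 = -nH), CUT_DIRS (--cut-dirs=N)
wget_local_path() {
  prefix=$1
  rest=${2#*://}                 # drop http:// or https:// if present
  no_host=${3:-0}
  cut=${4:-0}
  host=${rest%%/*}               # e.g. www.example.com
  path=${rest#"$host"}           # e.g. /books/folder1/file1.txt
  path=${path#/}
  case $path in
    ''|*/) path="${path}index.html" ;;   # directory URLs are saved as index.html
  esac
  case $path in
    */*) dir=${path%/*}; file=${path##*/} ;;
    *)   dir=''; file=$path ;;
  esac
  # --cut-dirs=N drops the first N remote directory names
  while [ "$cut" -gt 0 ] && [ -n "$dir" ]; do
    case $dir in
      */*) dir=${dir#*/} ;;
      *)   dir='' ;;
    esac
    cut=$((cut - 1))
  done
  out=$prefix
  [ "$no_host" = 1 ] || out="$out/$host"   # -nH drops the host directory
  [ -z "$dir" ] || out="$out/$dir"
  printf '%s/%s\n' "$out" "$file"
}

wget_local_path /data/Downloads/books www.example.com/books/folder1/file1.txt
wget_local_path /data/Downloads/books www.example.com/books/folder1/file1.txt 1 1
```

The first call prints `/data/Downloads/books/www.example.com/books/folder1/file1.txt`, and the second (with -nH --cut-dirs=1) prints `/data/Downloads/books/folder1/file1.txt`.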

The alternative, without -P, was:

```shell
mkdir -p /data/Downloads/books
cd /data/Downloads/books
wget -nc -np --reject "index.html*" -e robots=off -r www.example.com/books/
```

Downloading a normal website / don't skip index.html files:

If you want to download a full site that is just offering normal web content, remove the --reject "index.html*" part so that index.html pages (and any variation of index.html*) get downloaded as well.
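Because `index.html*` contains a wildcard, wget treats it as a pattern matched against file names rather than as a plain suffix. Here's a small sketch of which names that pattern catches. It uses the shell's own `case` matching as a stand-in for wget's matching (an assumption, though both behave the same for a simple pattern like this one), and `matches_pattern` is a hypothetical name. With the `--reject` removed for a normal site, all of these would be kept.

```shell
# Succeed when NAME matches the shell wildcard PATTERN.
matches_pattern() {
  case $2 in
    $1) return 0 ;;
    *)  return 1 ;;
  esac
}

for name in 'index.html' 'index.html?C=N;O=D' 'index.html?C=M;O=A' 'file4.txt' 'myindex.html'; do
  if matches_pattern 'index.html*' "$name"; then
    echo "rejected: $name"
  else
    echo "kept:     $name"
  fi
done
```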

So it will look like this:

```shell
wget -nc -np -e robots=off -r www.example.com/wiki/
```

If you want to dump everything to a specific folder do it like this:

```shell
mkdir -p /data/Downloads/wiki
cd /data/Downloads/wiki
wget -nc -np -e robots=off -r www.example.com/wiki/
```

Or with one command:

```shell
wget -nc -np -e robots=off -r www.example.com/wiki/ -P /data/Downloads/wiki
```

Note: when downloading a web page, keep in mind that the site owner may not want other people mirroring it, so they probably put a lot of protection against that. The user agent argument and the --random-wait argument can help, and both are lightly covered below. Links that cover them more in depth are at the end of this article.

To download a normal content website (instead of a file share / file & folder browsing / indexed / FancyIndexed website), look at these notes:

```shell
############################################
# The Command to download a normal website #
############################################
# These commands will vary depending on how you want the content saved on your
# drive (convert links or not, which files to download) and on how well the
# website defends against bots (do you need random waits or rate limiting? most
# likely yes. Do you need to pretend to be a normal browser with a user agent?)
wget -U 'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.3) Gecko/2008092416 Firefox/3.0.3' --limit-rate=200k -nc -np -k --wait=2 --random-wait -r -p -E -e robots=off www.example1.com/somesite -P /dumphere

# -P /dumphere: downloads everything into /dumphere
# (NOT USED ABOVE) --domains=infotinks.com: I didn't include it because this site
#     is hosted by Google, so the crawl might need to step into Google's domains
# --limit-rate=200k: limit the download to 200 KB/s (k or K for kilobytes,
#     m or M for megabytes, g or G for gigabytes). Keep it between 10k and 200k
#     if you want to download a full website and not look like a bot
# -nc/--no-clobber: don't overwrite existing files (useful when an interrupted
#     download is relaunched)
# -np: don't ascend above www.example1.com/somesite
# (OPTIONAL & CHANGES CONTENT) -k/--convert-links: rewrite links so they work
#     locally, offline, instead of pointing to the website online
# --wait=2 --random-wait: random waits between downloads, since websites don't
#     like being downloaded wholesale. --random-wait varies the pause between
#     0.5 and 1.5 times the --wait value, so it needs --wait to do anything
# -r: recursive, downloads the full website
# -p/--page-requisites: also downloads what each page needs to display
#     (images, CSS and so on)
# -E/--adjust-extension: adds .html to HTML pages whose URL doesn't end in
#     .html (e.g. .php or .asp pages), so they open properly locally
# -e robots=off: ignore robots.txt; websites don't like robots/crawlers unless
#     they are Google or another famous search engine
# -U 'Mozilla...': pretends to be a regular browser (Firefox) looking at the
#     page instead of a crawler like wget. Replace the string with any other
#     "user agent" line
# (NOT USED ABOVE) if authentication is required:
#     --http-user=user1
#     --http-password=password1

### SOME USER AGENT STRINGS ###
# * User agent strings assure the web server you are a browser instead of a
#   robot, but other things help as well (random waits, not downloading files
#   back to back, rate limiting, etc.)
# * You can find other user agent strings in the HTTP headers your browser
#   sends when it requests a site
# Ignore the leading # on each string; it just marks where the line starts and comments it out
# IE6 on Windows XP: Mozilla/4.0 (compatible; MSIE 6.0; Microsoft Windows NT 5.1)
# Firefox on Windows XP: Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.14) Gecko/20080404 Firefox/2.0.0.14
# Firefox on Ubuntu Gutsy: Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.1.14) Gecko/20080418 Ubuntu/7.10 (gutsy) Firefox/2.0.0.14
# Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.3) Gecko/2008092416 Firefox/3.0.3
# Mozilla/4.5 (X11; U; Linux x86_64; en-US
# Safari on Mac OS X Leopard: Mozilla/5.0 (Macintosh; U; Intel Mac OS X; en) AppleWebKit/523.12.2 (KHTML, like Gecko) Version/3.0.4 Safari/523.12.2
# Googlebot/2.1 (+http://www.googlebot.com/bot.html)
```
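To get a feel for what `--limit-rate=200k` means for a whole site, here's some quick arithmetic as a sketch. It assumes wget treats k, m and g as multiples of 1024 (1k = 1024 bytes). It only handles whole numbers and ignores the pauses from `--wait`/`--random-wait`, so a real download takes longer. The 1 GiB site size is made up, and `rate_to_bytes`/`transfer_seconds` are hypothetical names.

```shell
# Convert a --limit-rate value (bytes, or a whole number with k/m/g suffix)
# to bytes per second, assuming 1k = 1024 bytes.
rate_to_bytes() {
  case $1 in
    *[kK]) echo $(( ${1%?} * 1024 )) ;;
    *[mM]) echo $(( ${1%?} * 1024 * 1024 )) ;;
    *[gG]) echo $(( ${1%?} * 1024 * 1024 * 1024 )) ;;
    *)     echo "$1" ;;
  esac
}

# Lower bound on transfer time in whole seconds for TOTAL_BYTES at RATE.
transfer_seconds() {
  echo $(( $1 / $(rate_to_bytes "$2") ))
}

total=$((1024 * 1024 * 1024))     # hypothetical 1 GiB site
secs=$(transfer_seconds "$total" 200k)
echo "1 GiB at 200k: about $((secs / 60)) minutes"   # prints about 87 minutes
```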

From here on, we are back on the topic of downloading from an indexed site (one that's sharing files and folders, like in the screenshots at the top).

BONUS (random wait, authenticated pages & continuing downloads):

Three more interesting arguments, followed by the winning commands.

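--random-wait: makes your downloads look less robotic by waiting a random moment before downloading the next file. The pause varies between 0.5 and 1.5 times the --wait value, so pair it with something like --wait=2. On its own it does nothing, because the default wait is 0.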
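Here's a tiny model of that behavior, using the 0.5 to 1.5 × wait range the GNU Wget manual describes. It's a sketch that only mimics the arithmetic. awk's `rand()` stands in for wget's own random source, and `random_wait` is a hypothetical name.

```shell
# Model of --random-wait: pick a pause between 0.5 and 1.5 times WAIT seconds.
# WAIT=0 (no --wait given) always yields 0, i.e. no pause at all.
random_wait() {
  awk -v w="$1" 'BEGIN { srand(); printf "%.3f\n", (0.5 + rand()) * w }'
}

random_wait 2    # some value from 1.000 up to 3.000
random_wait 0    # 0.000
```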

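--http-user and --http-password: for when the website is password protected and you need to log in. These answer the server's HTTP authentication prompt (the kind that pops up a login box in your browser). They don't work if the login is a web form (POST) or token based (REST).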
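To see why a web-form login is a different animal, here's what HTTP Basic authentication, the simplest scheme behind that browser pop-up, boils down to. The credentials are sent as a header containing base64 of `user:password`. That is encoding, not encryption, so prefer https. A form login instead posts the credentials in a request body and keeps you logged in with a cookie. The function name is hypothetical, and the user/password pair is the placeholder from this article.

```shell
# Build the HTTP Basic authentication header for USER and PASSWORD:
# "Basic " followed by base64("user:password").
basic_auth_header() {
  printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

basic_auth_header user1 password1   # prints Authorization: Basic dXNlcjE6cGFzc3dvcmQx
```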

-c or --continue: resumes a download where it left off. If a download was cancelled or failed, relaunching the same command makes wget try to pick up where it stopped. This only works if the web server (source) supports it (HTTP range requests). If it doesn't, the download just starts over. So -c is hit and miss, but it doesn't hurt to have it. When you use -c, leave out -nc, because they logically contradict each other. -nc says don't download the file if it (or, more correctly, a file with the same name) already exists at the destination. -c says try to download the file again and resume if possible. So you might ask, why is -nc good? Because you could have the same filename with different content, and you don't want to overwrite it. Do you always need -c? No, you only need it when resuming a download, but like I said it doesn't hurt, so just leave it on. This site shows -c in action: http://www.cyberciti.biz/tips/wget-resume-broken-download.html
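To keep -nc, -N and -c straight, here's a simplified model of what each does with a file that may already be on your disk. It sketches the rules described in the wget manual, not wget's actual code. It assumes the server reports sizes and modification times and supports range requests (which -c needs in order to resume). `wget_decision` is a hypothetical name.

```shell
# Simplified model: what happens to ONE file that may already exist locally.
# Args: MODE (nc, N, c or none) LOCAL_SIZE LOCAL_TIME REMOTE_SIZE REMOTE_TIME
# Sizes in bytes, times as Unix timestamps; LOCAL_SIZE empty = no local copy.
# Prints download, resume or skip.
wget_decision() {
  mode=$1 local_size=$2 local_time=$3 remote_size=$4 remote_time=$5
  if [ -z "$local_size" ]; then
    echo download; return                 # nothing local yet: always fetch
  fi
  case $mode in
    nc) echo skip ;;                      # -nc: the name exists, never touch it
    N)  if [ "$remote_time" -gt "$local_time" ] || [ "$remote_size" -ne "$local_size" ]; then
          echo download                   # -N: remote is newer or size differs
        else
          echo skip
        fi ;;
    c)  if [ "$local_size" -lt "$remote_size" ]; then
          echo resume                     # -c: fetch only the missing tail
        else
          echo skip                       # already complete (or larger)
        fi ;;
    *)  echo download ;;                  # no flag: fetch it again
  esac
}

wget_decision c 150 0 200 0    # prints resume
```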

```shell
# downloading from a website with a password:
wget -r -nc -np -e robots=off --reject "index.html*" --http-user=user1 --http-password=password1 www.example1.com/books-secure/

# downloading with resume (notice I didn't include -nc, because -c and -nc don't
# work together: -nc skips files that are already there, even if they are not
# fully downloaded, on the reasoning that they might be different files;
# -c tries to resume them)
wget -r -c -np -e robots=off --reject "index.html*" www.example1.com/books-secure/

# downloading with random waits (--random-wait needs --wait to have any effect)
wget -r -nc -np -e robots=off --reject "index.html*" --wait=2 --random-wait www.example1.com/books-secure/
```

The Winners for best commands:

```shell
#################
# AUTH REQUIRED #
#################
# No Clobber (doesn't overwrite, skips if the file exists)
wget -r -nc -np -e robots=off --reject "index.html*" --wait=2 --random-wait --http-user=user1 --http-password=password1 www.example1.com/books-secure/
# Resume supported
wget -r -c -np -e robots=off --reject "index.html*" --wait=2 --random-wait --http-user=user1 --http-password=password1 www.example1.com/books-secure/

#####################
# AUTH NOT REQUIRED #
#####################
# No Clobber (doesn't overwrite, skips if the file exists)
wget -r -nc -np -e robots=off --reject "index.html*" --wait=2 --random-wait www.example1.com/books-secure/
# Resume supported
wget -r -c -np -e robots=off --reject "index.html*" --wait=2 --random-wait www.example1.com/books-secure/

## Tips, for all four commands above:
# 1) If you're downloading a normal website and need the index pages, remove the
#    '--reject "index.html*"' argument so the index.html files get downloaded too.
# 2) If you can get away without --wait/--random-wait, do it. Your total time to
#    completion will be shorter, because downloads start one right after another
#    with no waiting in between.
# 3) Add "-P destinationdirectory" to the very end if you want to dump into
#    destinationdirectory (wget makes the directory for you, and everything goes there).
```
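If you'd rather not retype these, here's a sketch of a small shell function that runs any of the four variants. `winner_cmd` and the `WGET` override are hypothetical names, not part of wget. `--wait=2` is an assumed value, included because `--random-wait` only randomizes around `--wait`.

```shell
# Hypothetical helper that runs one of the four "winner" commands.
# Usage: winner_cmd MODE URL [USER PASSWORD]
#   MODE: nc (no clobber, skip existing files) or c (resume partial files)
# Set WGET to another command name to inspect the arguments without downloading.
winner_cmd() {
  mode=$1 url=$2 user=${3:-} pass=${4:-}
  case $mode in
    nc|c) ;;
    *) echo "winner_cmd: MODE must be nc or c" >&2; return 2 ;;
  esac
  # Build the argument list; quoting keeps index.html* from being expanded.
  set -- -r "-$mode" -np -e robots=off --reject "index.html*" --wait=2 --random-wait
  if [ -n "$user" ]; then
    set -- "$@" "--http-user=$user" "--http-password=$pass"
  fi
  "${WGET:-wget}" "$@" "$url"
}
```

For example, `winner_cmd c www.example1.com/books-secure/ user1 password1` runs the resume variant with authentication. Keeping the arguments in `"$@"` rather than a plain string preserves the quotes around `index.html*`, so the shell never expands it against files in your current directory.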

The end.

More info (trying not to look like a bot): check out the user agent option, which lets wget pretend to be a browser. Half the time, running wget is a battle against a server's bot-tracking abilities. You're not a bot, but wget comes off as one. That is why we have things like -e robots=off, --random-wait, and the -U/--user-agent option. To find out more about the user agent argument, check out this link: http://fosswire.com/post/2008/04/more-advanced-wget-usage/

Also, there's more info in the man page, which is really well done: http://www.gnu.org/software/wget/manual/wget.html